Game of Life: multi-threaded code analysis and review

Some months ago I began experimenting with multi-threading and OpenGL. I decided that a Game of Life simulator would be a good target for my pet project, being ripe for parallel processing and requiring little graphics know-how. I’ve met my goals, so here I’ll be describing how I implemented the various features and some of the troubles I encountered.

I chose to use SDL2 as my cross-platform game programming API. It provides an interface for creating threads on a wide variety of systems, as well as enables creation of OpenGL contexts. SDL2 is still farily new, so you may need to build the library for a given Linux system and will almost certainly need to download the SDL2 runtime library for Windows machines. I’ll be pulling numerous examples from my source code, but if you wish to see them in their full context please grab the code on GitHub. You can download pre-compiled binaries and the source code here.

High-level overview of the game algorithm

You might be able to deduce how I’ve implemented my Conway’s Game of Life algorithm from the code, but it helps to get an up-front idea. Firstly, you’ll want to read this article on Conway’s Game of Life if you have no idea what this is or how you got here.

The game simulation occurs in two buffers. These will be referred to as the front buffer and the back buffer. The front buffer contains the current state of all the cells in the Game of Life world. To simulate one generation, I iterate through each cell in the front buffer. For each cell, I update the cell state with the same coordinates in the back buffer according to the game rules. Once I’ve iterated through each cell, I swap the front buffer and the back buffer, such that the front buffer now contains the data from the back buffer, and the back buffer now contains the data from the front buffer. Then repeat the process ad-infinitum.

I’ve implemented a few methods to speed each generation up. The first is based on the idea of dirty rectangles, that being that we don’t need to update any areas where nothing has changed. A cell can only become changed in a generation if one or more of it’s neighboring cells have changed state. So I subdivide the world in to regions of a given size, and mark these subdivisions as active or not active during each generation. A region is marked active if any of the cells within its border change state. If any cells on the edge of a region change state, we mark the region adjacent to it as active as well (corners included, they affect three adjacent regions). The very first generation, we simulate all regions. After the first generation, any regions which have changed state will be marked active. The number of regions active at this point will be much smaller, greatly reducing the workload. I wrote an article prior to this one that describes the idea in some detail as well.

Initialization

Let’s have a look at the main() function to get a quick idea of what’s going on when the program is started. Some segments have been omitted for clarity or emphasis, see the full source for any missing bits.

int main(int argc, char **argv) { /* omitted: parse command line arguments */ /* omitted:create a window and an OpenGL context with SDL */ ThreadedLifeContext_t *worldContext = CreateThreadedLifeContext(options.worldWidth, options.worldHeight, &options.lifeRules, options.regionSize, 0, 1, options.lifeFile, options.numThreads, options.terminationGeneration); /* omitted: error checking */ LifeGameGraphicsContext_t *graphicsContext = CreateLifeGameGraphicsContext(worldContext); /* omitted: error checking */ //create and start the simulation thread(s). SDL_Thread *lifeThread[options.numThreads]; for (int i = 0; i < options.numThreads; i++) { char threadTitle[10] = "SimThread."; //. is the spot where our ID goes threadTitle[9] = i + 65; lifeThread[i] = SDL_CreateThread((SDL_ThreadFunction)&ThreadLifeMain, threadTitle, worldContext); /* omitted: error checking */ } /* omitted keyboard input setup and graphics statistics */ while (worldContext->bRunning) { //Draw world so we can see initial state. if(SyncWorldToScreen(window, worldContext, graphicsContext, options.syncRate)) UpdateGraphicsStats(&graphicsStats); /* omitted: keyboard input and game statistics */ } /* omitted: cleanup */ } |

The first thing I do is create a new ThreadLifeContext_t structure called worldContext. This data structure contains all the necessary information for simulating the Game of Life, many of which are configurable using program arguments. In addition to the bare minimum for the “game” it contains information for splitting the simulation among multiple threads, as well as handling other optimizations such as the dirty rectangles mentioned earlier.

Next up I create a LifeGameGraphics_t structure called graphicsContext. It holds a copy of the cell-level data from our worldContext structure for use during rendering. In addition, it has data for determining the scale and translation of objects drawn.

Now we get around to actually creating some threads to run the simulation in. We use SDL_CreateThread to do this portably. You’ll notice the spawned threads begin in the function ThreadLifeMain and receive the pointer to the worldContext structure we created earlier.

Finally, the parent thread enters the main game loop. This loop handles periodically rendering the simulation state to the screen with the SyncWorldToScreen function, as well as handling keyboard input from the user.

Multi-threading concerns in a nutshell

Perhaps it’s important to first discuss some concepts of multi-threading, which may make clearer why certain things are written the way they are in my program. I’ll assume you have a fuzzy idea of what it is, but maybe not the tools used to implement it.

A race condition, as Wikipedia puts it, is “the behavior of an electronic or software system where the output is dependent on the sequence or timing of other uncontrollable events. It becomes a bug when events do not happen in the order the programmer intended”. I want to avoid race conditions. Like the plague. Not only will they break the program in very interesting ways, they’re incredibly hard to track down and debug. It’s much easier to design them out of the system before we begin.

I do this by atomically accessing shared data. An atomic operation is one that cannot be interrupted while it is in progress. I accomplish this by using a mutually exclusive lock, or mutex. Mutexes function by only allowing one thread to hold the lock at a time. Whichever thread holds the lock can execute the code contained in the critical section. The critical section is the code that can only be run by the thread holding the lock. Any code that uses shared data that must not be modified elsewhere while it is being operated on should be placed in the critical section. It’s best to keep the critical section as small as possible, because it will impact threaded performance by blocking other threads trying to gain the lock. Mutexes aren’t magic though, there’s nothing stopping another thread from modifying some variables that another thread has locked. The code must be written to strictly obey mutual exclusive access patterns that have been designed.

I recommend reading over the Wikipedia pages on race conditions and threads if you don’t already have a good idea of what multi-threading is or aren’t aware of its pitfalls.

Threaded Simulation

ThreadLifeMain is really where the magic of the simulation happens. The “game loop” for each individual thread lives here, where all the heavy lifting is done.

void ThreadLifeMain(void *worldContext) { ThreadedLifeContext_t *context = worldContext; const int localQueueSize = 32; int bGotWork = 0; Stack_t *localSimBlockQueue = NewStack(localQueueSize); while (context->bRunning) { SDL_LockMutex(context->lock); if (bGotWork == 1) { bGotWork = 0; context->numThreadsWorking--; } if (bGotWork == 0 && context->numThreadsWorking == 0 && StackIsEmpty(context->simBlockQueue)) { //if we're swapping, that's a generation! Update stats: UpdateLifeStats(context); SwapThreadedLifeContextGenerationPointers(context); ClearDirtyRegionBuffer(context->backRegions, 0); //0 means null, 1 means simulate if (context->lifeStats.totalGenerations == context->terminationGeneration) context->bRunning = 0; //any changes to world state go here before building the work queue /* omitted: code for handling commands generated by the user such as reloading the life file or randomizing, etc. */ BuildSimBlockQueues(context->simBlockQueue, context->frontRegions); } int jobsPulled; jobsPulled = 0; while (jobsPulled < localQueueSize && !StackIsEmpty(context->simBlockQueue)) { StackPush(localSimBlockQueue, StackPop(context->simBlockQueue)); jobsPulled++; bGotWork = 1; } if (!StackIsEmpty(localSimBlockQueue)) context->numThreadsWorking++; SDL_UnlockMutex(context->lock); if (!StackIsEmpty(localSimBlockQueue)) { SimWorldBlocksFromQueue(context->back, context->backRegions, context->front, context->frontRegions, localSimBlockQueue, &context->lifeRules); } } DestroyStack(localSimBlockQueue); } |

Take a moment to read over the code, even if you don’t immediately understand what’s going on. Notice that the only thing this function has access to outside of its local variables is the pointer to the external ThreadedLifeContext_t I will refer to locally as simply context, and that every created thread got the same pointer.

I begin the threaded game loop, that will remain in motion as long as the context member bRunning is non-zero. You might notice immediately that we’re reading the value of context->bRunning outside of a critical section! We don’t even ask for a lock prior to starting the loop! This is part of the design. Only the parent thread (the one handling user input) is able to change the value of this variable, so it’s safe to read from it without locking first.**

**(I think. Torn-reads are a thing, but I’m not sure how that might cause a problem with this usage. The variable represents a boolean and only ever goes from non-zero to zero on program termination, so I can’t think of a situation where a torn-read or corruption might cause particularly disastrous results. Even so, a friend recommended I declared this variable as volatile, which should instruct CPU’s not to cache the variable. These kinds of situations require special consideration, considering the way various CPU’s may or may not behave. I feel like thread-unsafe access in this situation is OK because the worst case failure scenario is a thread quitting late).

I’ll walk through what’s happening here. I enter the loop and immediately ask for the context lock. The thread will block here until it’s able to acquire the lock. Once acquired, we see if the thread has any “work” to do. If I assume the thread just started, it doesn’t have any work (this was initialized before we entered the loop). Afterwards, the thread checks if the conditions are right for the front and back buffers to be swapped. Swapping should happen when “this” thread doesn’t have any work to do, when no other threads are still simulating regions, and when there are no more unsimulated regions. This combination of factors mean a generation has been completely simulated, and it’s safe to swap the buffer. It should be pretty clear why these checks need to be inside the critical section. So I swap the buffer, and clear the region markers on the back buffer. Now I fill the shared work queue with the regions that should be simulated for this next generation. This is shared information that must be operated on serially, so the code is still in the critical section.

Now it is finished with operations that take place at the end of a generation. The thread still has a lock on the context and begins filling its local work queue with region indexes that it will simulate, up to localQueueSize or until the shared work queue for that generation becomes empty. If the local queue is empty after attempting to get work, then the thread signals that it is no longer working on simulating any regions. Finally, I release the mutex lock. After releasing the lock, the thread can begin to process its work queue in parallel with whatever the other threads are doing. It will simulate regions until the local queue becomes empty, then loop around again.

You might be asking why we don’t need to lock access to the buffers containing cell data, or the region buffers except for when swapping their respective pointers from front to back. This is because each thread fills its local work queue with the indices of individual regions that only it will work on during a given generation. No two threads get assigned the same region to simulate, so they are certain not to modify the same parts of memory simultaneously.

Performance?

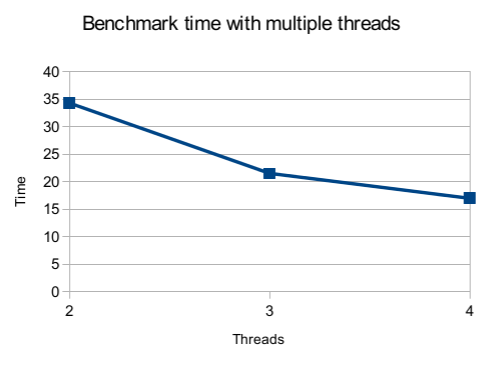

This implementation significantly increased the number of generations per second my simulator can compute when running on more than two CPU cores. I don’t have more than four cores in any of my machines, so I can’t really measure how well my implementation scales just yet. I tested a simple space-filler pattern set to stop at 3500 genrations. This is how it measures up with two, three, and four threads:

The command I used to benchmark the simulator was this:

time ./gameoflife -l data/spacefiller.life -g 3500 -t 2 -w 1600 -h 1200

Where the value after “-t” varied depending on how many threads I was running with. This was tested with the build initially released when this article was published.

You can download the source code from GitHub.

Or you can download the source plus binaries for Windows and Linux here.

The binaries only include a 32-bit Windows build, and a 64-bit Linux build that have been tested on Windows 7 64-bit, and Ubuntu 13.10 64-bit respectively.